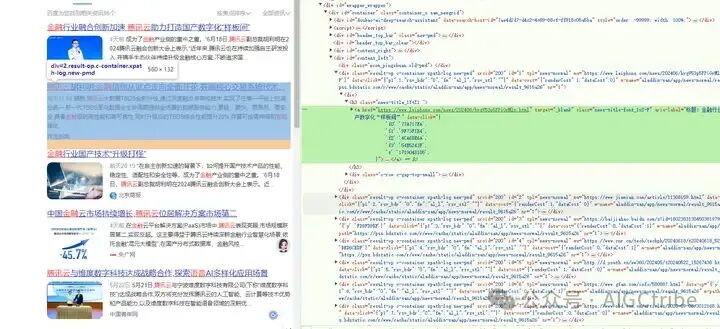

Open the Baidu search page:

https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D
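To see what this URL actually encodes, the query string can be decoded with Python's standard library. This is a minimal sketch; the variable names `search_url`, `params`, and `keyword` are illustrative only.

```python
from urllib.parse import parse_qs, urlsplit

# The search URL from above, split across two string literals for readability
search_url = (
    "https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8"
    "&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D"
)

# parse_qs percent-decodes each value as UTF-8 and turns "+" into a space
params = parse_qs(urlsplit(search_url).query)
keyword = params["word"][0]

print("keyword:", keyword)
for name, values in params.items():
    print(name, "=", values[0])
```

The `word` value decodes to the two search terms separated by spaces, which is why the URL contains `+++` between the percent-encoded groups.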

Look at the pagination pattern:

https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn=40

https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn=30

https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn=0

All three URLs are Baidu search links that return news results for a specific keyword. What distinguishes them is the `pn` parameter in the query string, which is the offset of the first result rather than a literal page number.

- The first URL has `pn=40`, which requests the fifth page of news results, assuming 10 results per page (the step used in the prompt below).
- The second URL has `pn=30`, which requests the fourth page.
- The third URL has `pn=0`, which requests the first page.

The other parameters are identical in all three URLs:

- `rtt`: sort order of the search results; set to 1 here, possibly meaning sort by time.
- `bsst`: apparently whether to force the search; set to 1 here, meaning forced.
- `cl`: search type; set to 2 here, meaning news search.
- `tn`: search service type; set to news here, meaning news search.
- `ie`: input encoding; set to utf-8 here.
- `word`: search keyword; set to “腾讯云智能语音 金融” (Tencent Cloud intelligent speech, finance) here.
- `x_bfe_rqs`: possibly a request parameter used internally by Baidu.
- `x_bfe_tjscore`: possibly an internal Baidu parameter for computing or recording a relevance score for the results.
- `tngroupname`: possibly an internal Baidu parameter naming the result group.
- `newVideo`: possibly an internal Baidu parameter controlling the display of video news.
- `goods_entry_switch`: possibly an internal Baidu parameter toggling a product entry point.
- `rsv_dl`: possibly an internal Baidu parameter specifying how results are displayed.

In short, the URLs request news results for the same keyword and differ only in which page they ask for, so only `pn` changes. The number of results per page may be controlled by another parameter, but nothing in this example states it explicitly. It is usually fixed, for example 10 or 20 results per page.

Prompt entered into Deepseek:

You are a Python programming expert. Write a Python script that scrapes Baidu search pages. The specific tasks are:

Parse the page:

https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn={pagenumber}

The value of {pagenumber} starts at 0 and increases by 10 until it reaches 40;

Locate all div tags with class="result-op c-container xpath-log new-pmd";

Within each div tag, locate the a tag with class="news-title-font_1xS-F", extract its href attribute as the page download URL, and extract its aria-label attribute as the page file name;

Within each div tag, locate the span tag with class="c-font-normal c-color-text" and use it as the content summary of the page;

Write the page download URL, page file name, and content summary to an Excel file, and save the Excel file to the folder `F:\aivideo\finance`

Download each page and save it to the folder `F:\aivideo\finance`

Notes:

Print a message to the screen at every step

After crawling each URL, pause for a random 3-5 seconds;

After downloading each page, pause for a random 3-6 seconds;

Set the request headers:

- `Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7`
- `Accept-Encoding: gzip, deflate, br, zstd`
- `Accept-Language: zh-CN,zh;q=0.9,en;q=0.8`
- `Connection: keep-alive`
- `Host: http://www.baidu.com`
- `Referer: https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn=30`
- `Sec-Ch-Ua: "Not/A)Brand";v="8", "Chromium";v="126", "Google Chrome";v="126"`
- `Sec-Ch-Ua-Mobile: ?0`
- `Sec-Ch-Ua-Platform: "Windows"`
- `Sec-Fetch-Dest: document`
- `Sec-Fetch-Mode: navigate`
- `Sec-Fetch-Site: same-origin`
- `Sec-Fetch-User: ?1`
- `Upgrade-Insecure-Requests: 1`
- `User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36`

Source code generated by Deepseek (two headers are adjusted, as noted in the comments):

```python
import os
import random
import time

import pandas as pd
import requests
from bs4 import BeautifulSoup

# Request headers.
# "Host" is left out: requests derives it from each URL, and the value in the
# prompt ("http://www.baidu.com") includes a scheme, which is not a valid Host.
# "Accept-Encoding" is limited to gzip and deflate, which requests always
# decodes; br and zstd need optional packages to be installed.
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
    "Connection": "keep-alive",
    "Referer": "https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn=30",
    "Sec-Ch-Ua": '"Not/A)Brand";v="8", "Chromium";v="126", "Google Chrome";v="126"',
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": '"Windows"',
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "same-origin",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36",
}

# Create the output folder if it does not exist yet
output_folder = r"F:\aivideo\finance"
os.makedirs(output_folder, exist_ok=True)

# One dict per search result; converted to a DataFrame at the end
rows = []

# Request each result page: pn = 0, 10, 20, 30, 40
for pagenumber in range(0, 41, 10):
    url = f"https://www.baidu.com/s?rtt=1&bsst=1&cl=2&tn=news&ie=utf-8&word=%E8%85%BE%E8%AE%AF%E4%BA%91%E6%99%BA%E8%83%BD%E8%AF%AD%E9%9F%B3+++%E9%87%91%E8%9E%8D&x_bfe_rqs=03E80&x_bfe_tjscore=0.100000&tngroupname=organic_news&newVideo=12&goods_entry_switch=1&rsv_dl=news_b_pn&pn={pagenumber}"
    response = requests.get(url, headers=headers)
    if response.status_code == 200:
        soup = BeautifulSoup(response.text, "html.parser")
        results = soup.find_all("div", class_="result-op c-container xpath-log new-pmd")
        for result in results:
            a_tag = result.find("a", class_="news-title-font_1xS-F")
            if a_tag:
                href = a_tag.get("href")
                file_name = a_tag.get("aria-label")
                span_tag = result.find("span", class_="c-font-normal c-color-text")
                content_summary = span_tag.text if span_tag else ""
                rows.append({"URL": href, "File Name": file_name, "Content Summary": content_summary})

                # Download the linked page
                try:
                    web_response = requests.get(href, headers=headers)
                    if web_response.status_code == 200:
                        file_path = os.path.join(output_folder, f"{file_name}.html")
                        with open(file_path, "w", encoding="utf-8") as f:
                            f.write(web_response.text)
                        print(f"Downloaded {href} to {file_path}")
                        # Pause 3-6 seconds after each downloaded page
                        time.sleep(random.uniform(3, 6))
                except Exception as e:
                    print(f"Failed to download {href}: {e}")
        # Pause 3-5 seconds after each search page
        time.sleep(random.uniform(3, 5))
        print(f"Processed page {pagenumber}")
    else:
        print(f"Failed to fetch page {pagenumber}, status code: {response.status_code}")

# Save the collected rows to Excel (pandas needs openpyxl to write .xlsx)
df = pd.DataFrame(rows, columns=["URL", "File Name", "Content Summary"])
excel_file = os.path.join(output_folder, "baidu_search_results.xlsx")
df.to_excel(excel_file, index=False)
print(f"Saved data to {excel_file}")
```

Running the source code in VS Code succeeded.
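The paging rule in the prompt (`pn` starts at 0 and advances by 10 up to 40) can also be sketched as a small helper that builds each page URL from a keyword. `page_offsets` and `build_page_url` are hypothetical names, and only the first few query parameters are included for brevity; whether Baidu returns the same results without the remaining parameters is an assumption that has not been verified here.

```python
from urllib.parse import urlencode

BASE = "https://www.baidu.com/s"


def page_offsets(last_offset=40, step=10):
    """Return the pn values for each page: 0, 10, ..., last_offset."""
    return list(range(0, last_offset + 1, step))


def build_page_url(keyword, pn):
    """Build a news search URL for the given keyword and result offset."""
    query = {
        "rtt": 1,
        "bsst": 1,
        "cl": 2,
        "tn": "news",
        "ie": "utf-8",
        "word": keyword,  # urlencode percent-encodes it and turns spaces into "+"
        "pn": pn,
    }
    return f"{BASE}?{urlencode(query)}"


for pn in page_offsets():
    page = pn // 10 + 1  # pn is an offset, so pn=40 is page 5 at 10 results per page
    print(page, build_page_url("腾讯云智能语音 金融", pn))
```

Building the URL from a dictionary keeps the keyword readable in the source and makes it easy to change the search terms without re-encoding them by hand.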

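The script uses each result's `aria-label` text directly as a file name. On Windows, a title containing characters such as `?`, `:` or `|` cannot be used as a file name, so those downloads would fail when the file is opened. A small sanitizing step may be worth adding; the sketch below is one possible approach, and `safe_file_name` is a hypothetical helper that is not part of the generated script.

```python
import re


def safe_file_name(title, max_length=100):
    """Turn a page title into a string that is safe to use as a file name."""
    # Replace characters that Windows forbids in file names, plus line breaks and tabs
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]', "_", title or "untitled")
    # Windows also rejects names that end in a space or a dot
    cleaned = cleaned.strip(" .")
    # Keep the name reasonably short and never return an empty string
    return cleaned[:max_length] or "untitled"


print(safe_file_name("标题: 金融/语音?"))
```

In the script, this would be applied where the file path is built, for example `f"{safe_file_name(file_name)}.html"`.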