
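In a talk at MIT, OpenAI research scientist Hyung Won Chung (@hwchung27) revealed the core secret behind training the o1 model: incentivizing the model to learn is the best way to cultivate the general skills an AGI system needs. Details below: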

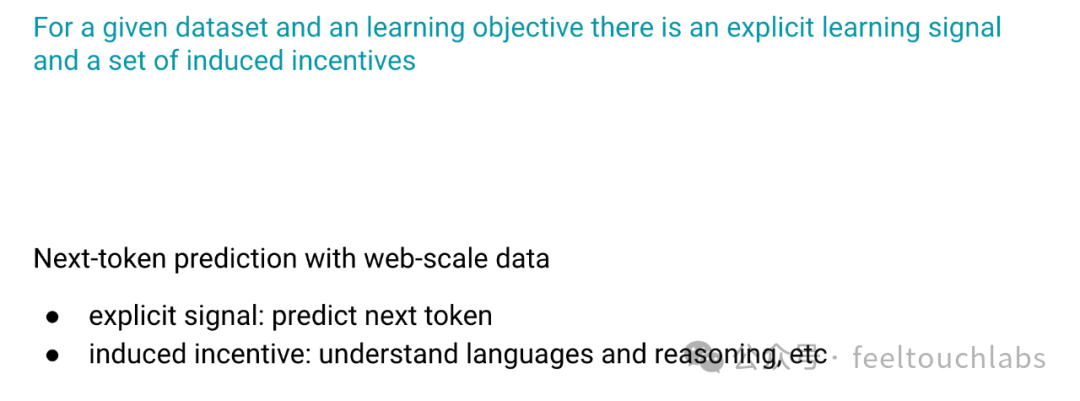

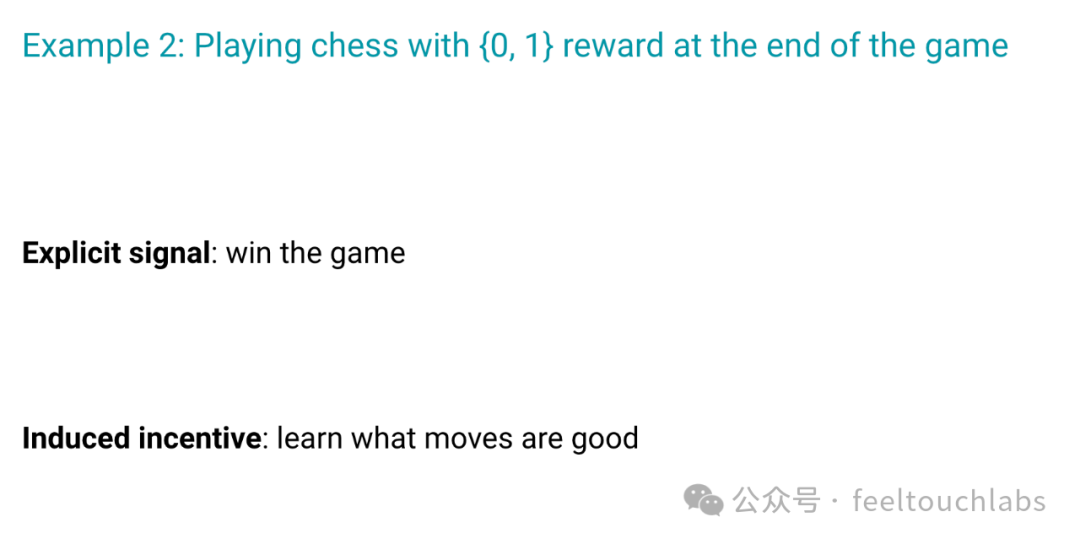

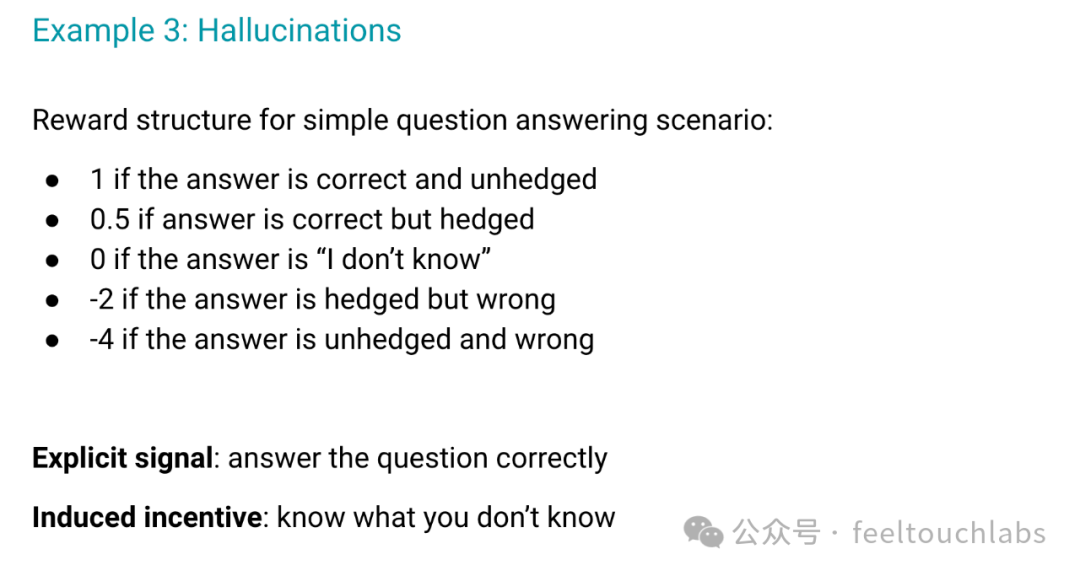

Don’t teach. Incentivize.

Non-goal: share specific technical knowledge and experimental results

Goal: share how I think with AI being a running example

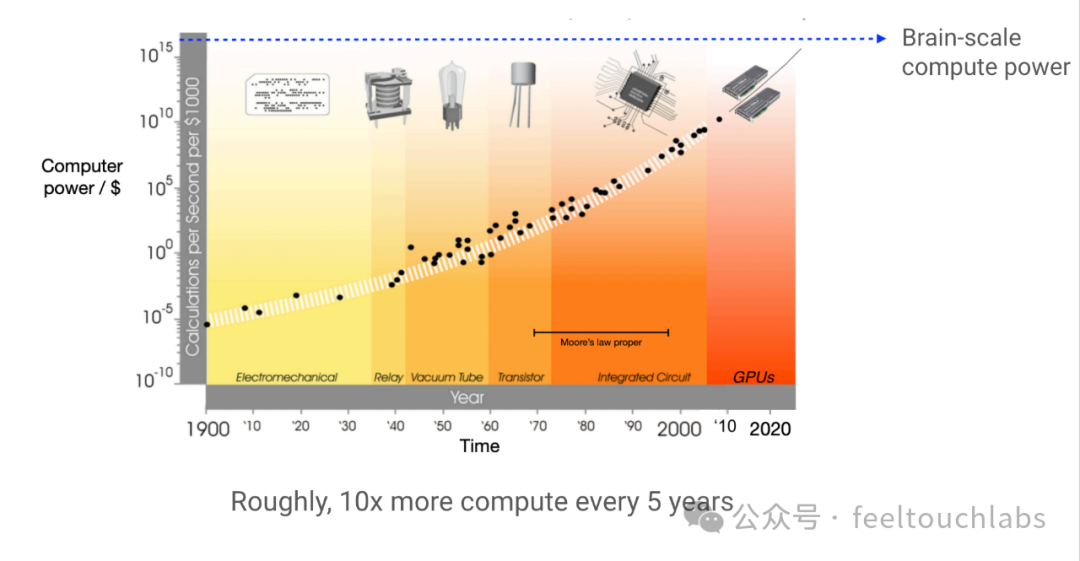

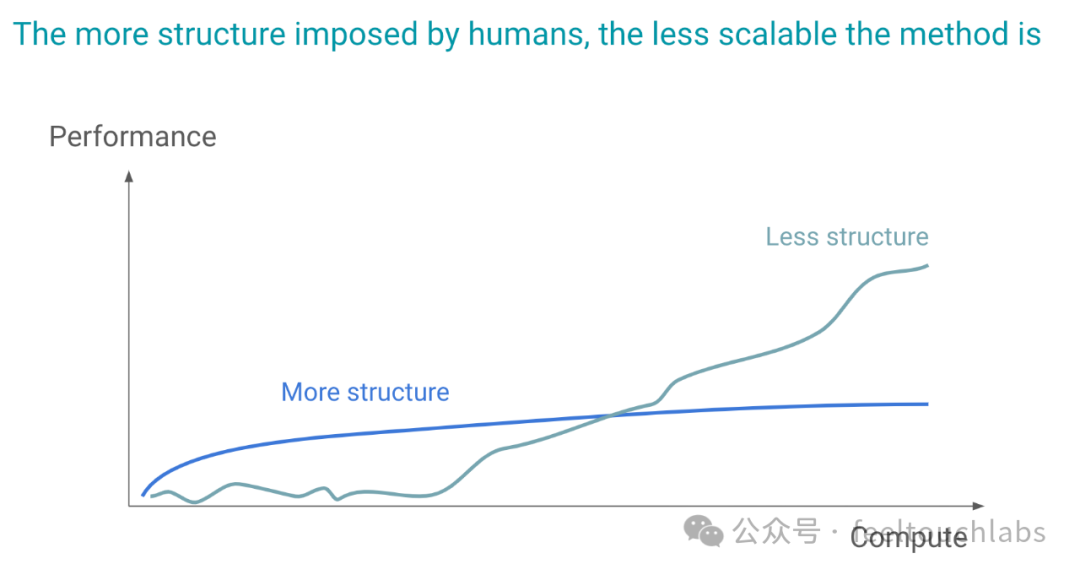

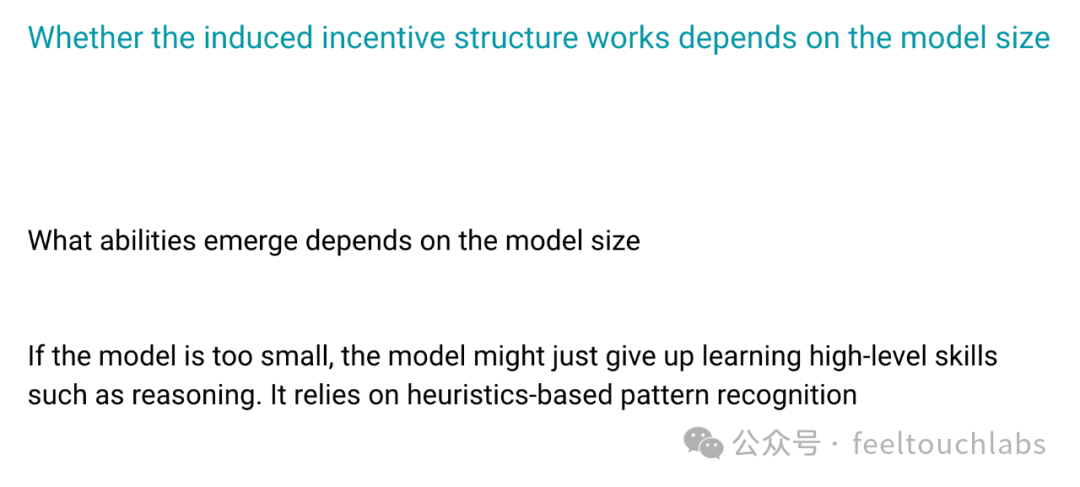

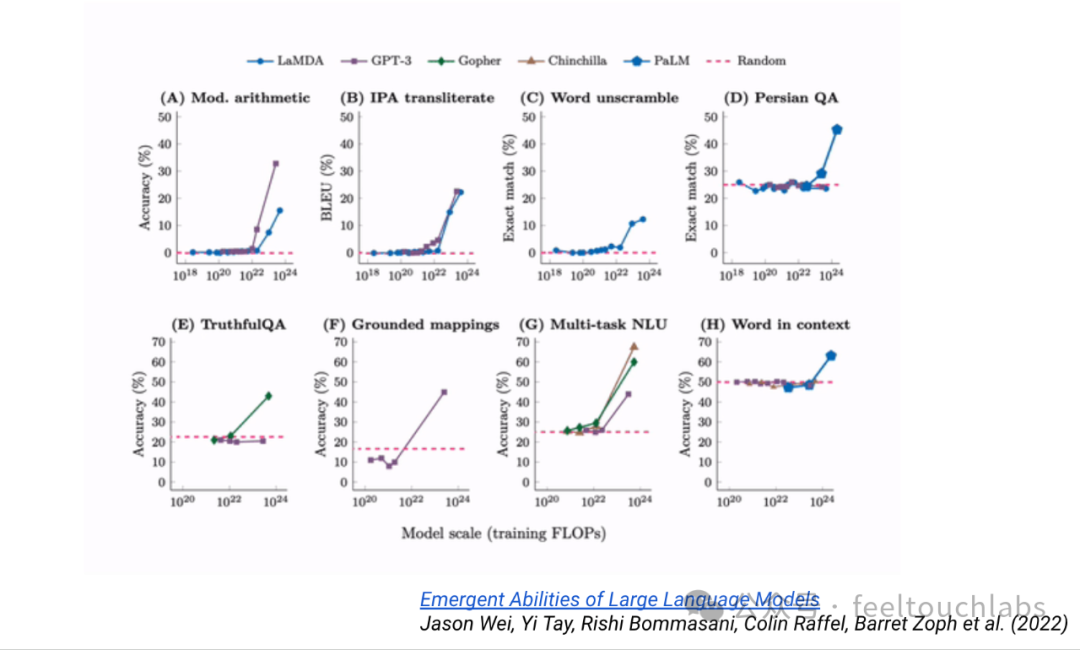

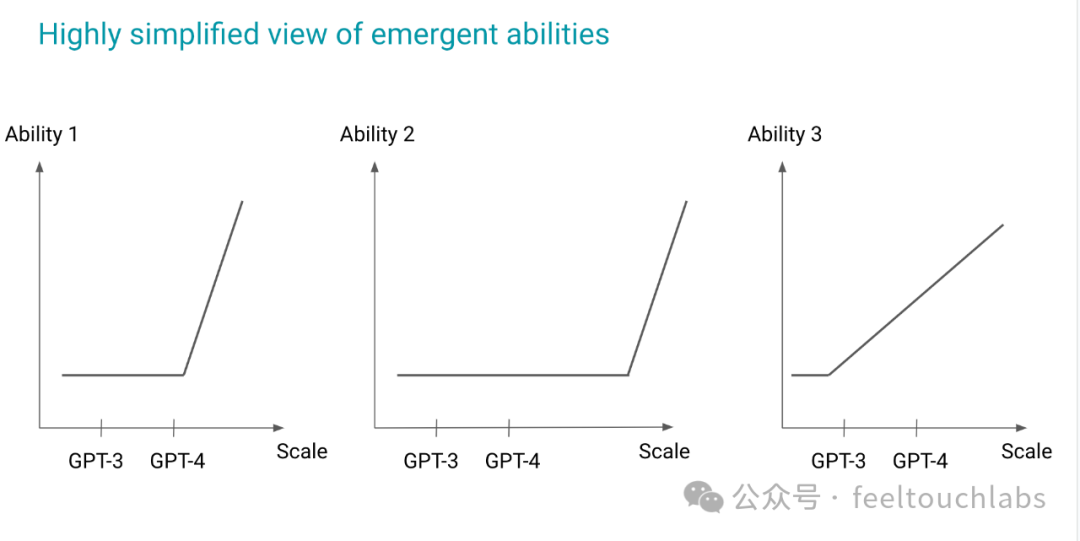

Closing

- Compute cost is decreasing exponentially
- AI researchers should harness this by designing scalable methods
- The current generation of LLMs relies on next-token prediction, which can be thought of as a weak incentive structure for learning general skills such as reasoning
- More generally, we should incentivize models instead of directly teaching them specific skills
- Emergent abilities require having the right perspective, such as unlearning
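To make the next-token prediction point concrete, here is a minimal sketch of the objective, assuming a toy vocabulary, a toy sequence, and two hand-made stand-in "models" (all hypothetical, not from the talk). The loss is the average negative log-probability assigned to each actual next token. Nothing in it mentions reasoning or any other skill, which is one way to read the slide's claim that it is only an indirect, weak incentive.

```python
import math

# Toy vocabulary and training sequence (hypothetical, for illustration only)
VOCAB = ["2", "+", "=", "4"]
SEQUENCE = ["2", "+", "2", "=", "4"]


def next_token_loss(tokens, predict):
    """Mean cross-entropy of predicting tokens[i + 1] from the prefix tokens[: i + 1].

    `predict` maps a prefix (list of tokens) to a dict {token: probability}.
    """
    losses = []
    for i in range(len(tokens) - 1):
        prefix, target = tokens[: i + 1], tokens[i + 1]
        probs = predict(prefix)
        # The objective only scores the probability given to the true next token;
        # it says nothing about how the model should arrive at that prediction.
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)


def uniform_model(prefix):
    # Knows nothing: every token in the vocabulary is equally likely
    return {tok: 1 / len(VOCAB) for tok in VOCAB}


def confident_model(prefix):
    # Hypothetical stand-in for a trained model: puts 0.7 on the correct
    # continuation of SEQUENCE and 0.1 on each of the other three tokens.
    target = SEQUENCE[len(prefix)]
    return {tok: 0.7 if tok == target else 0.1 for tok in VOCAB}


print(round(next_token_loss(SEQUENCE, uniform_model), 3))    # 1.386 (= ln 4)
print(round(next_token_loss(SEQUENCE, confident_model), 3))  # 0.357 (= -ln 0.7)
```

A lower loss only means better next-token guesses; whether a general skill like reasoning is acquired along the way is left entirely implicit in this objective.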


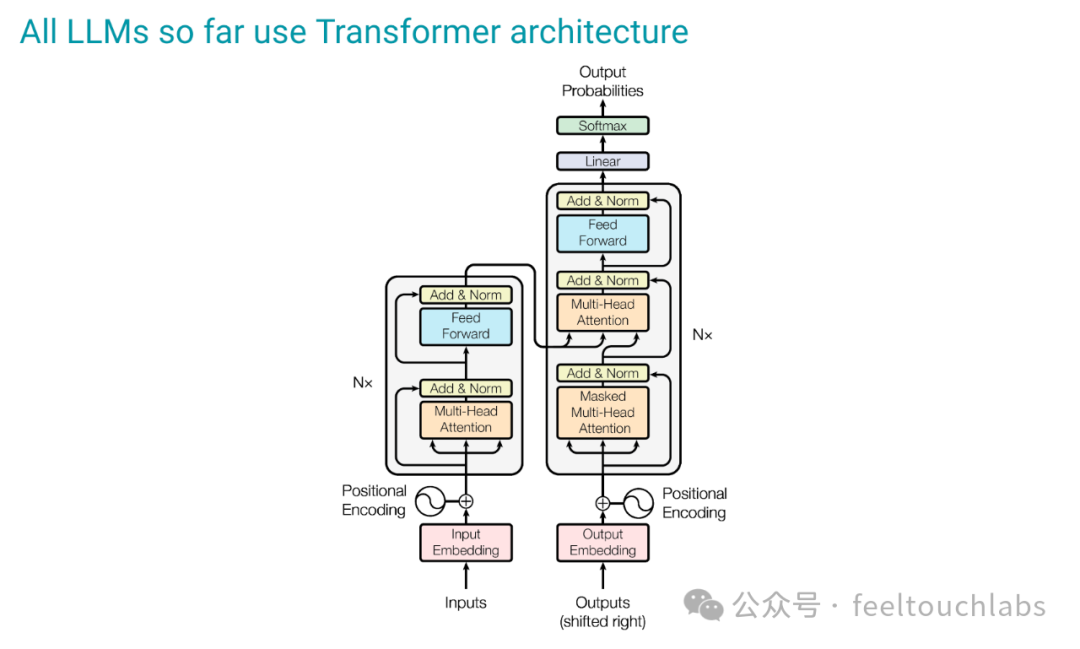

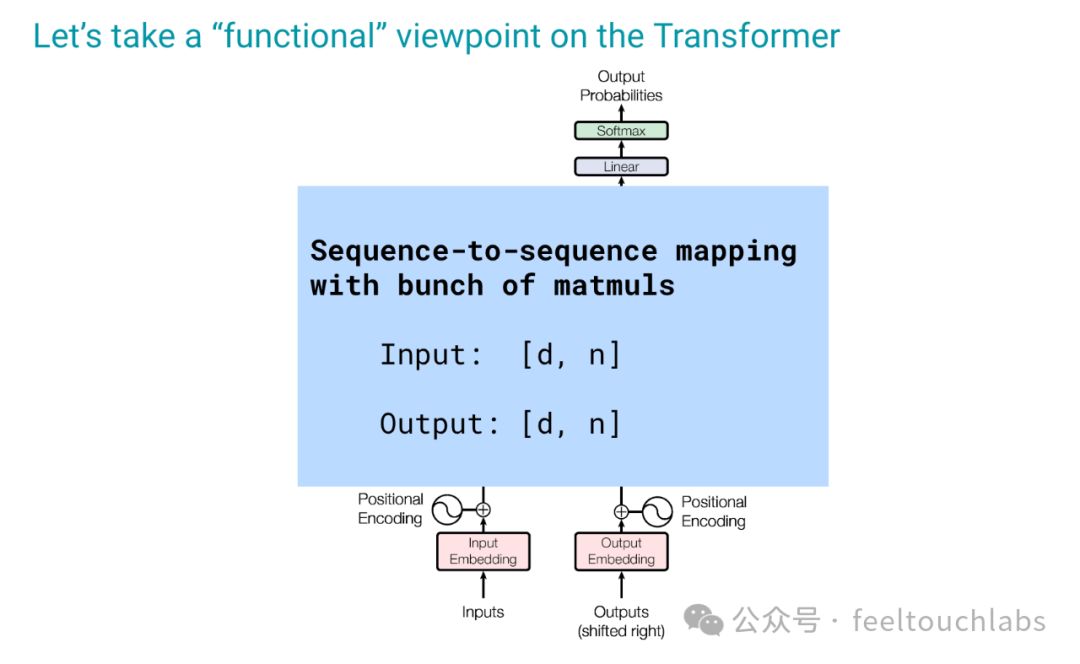

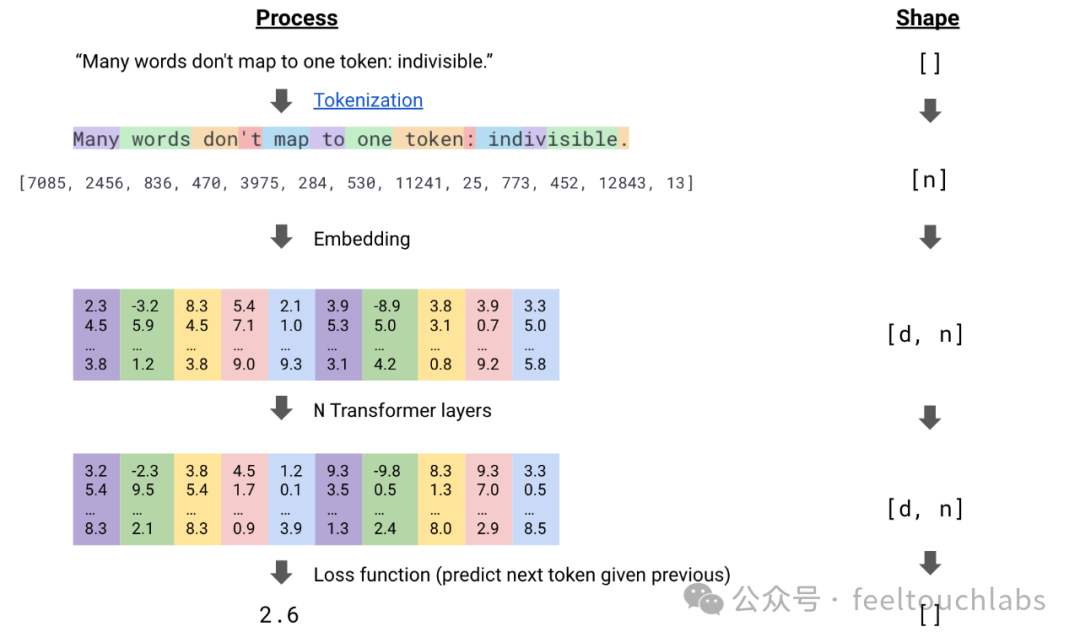

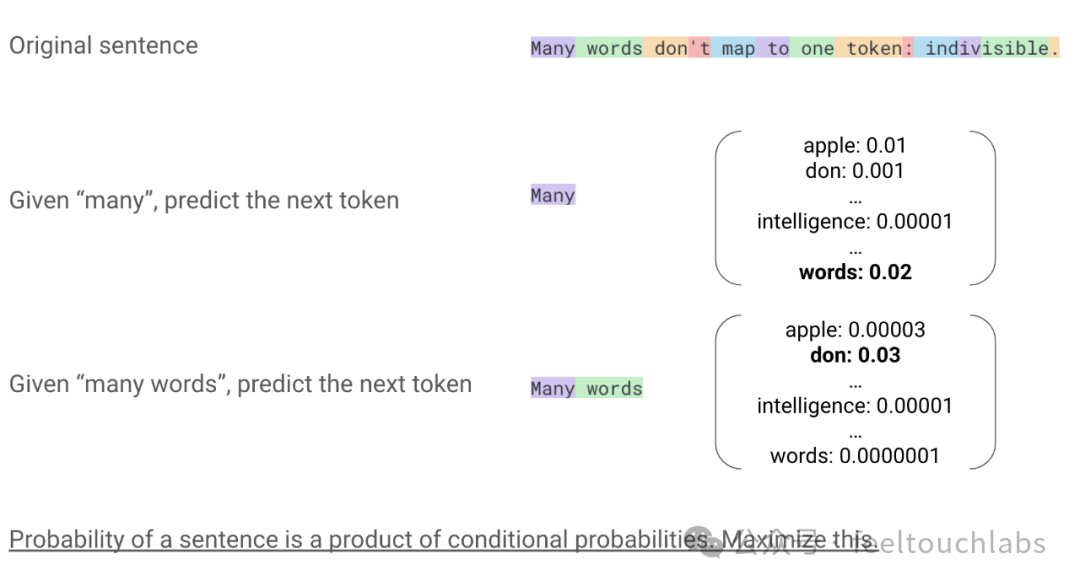

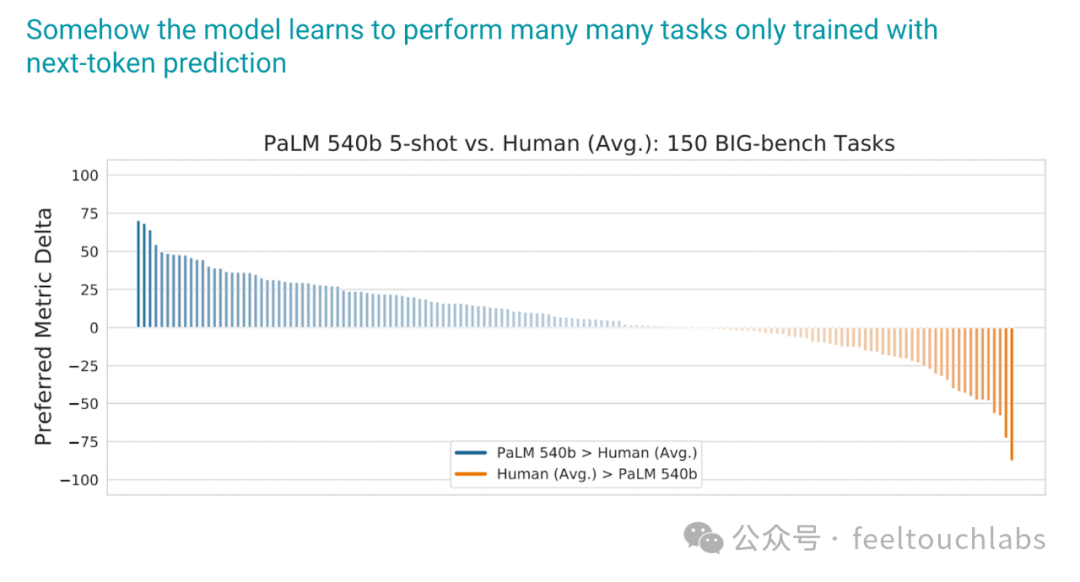

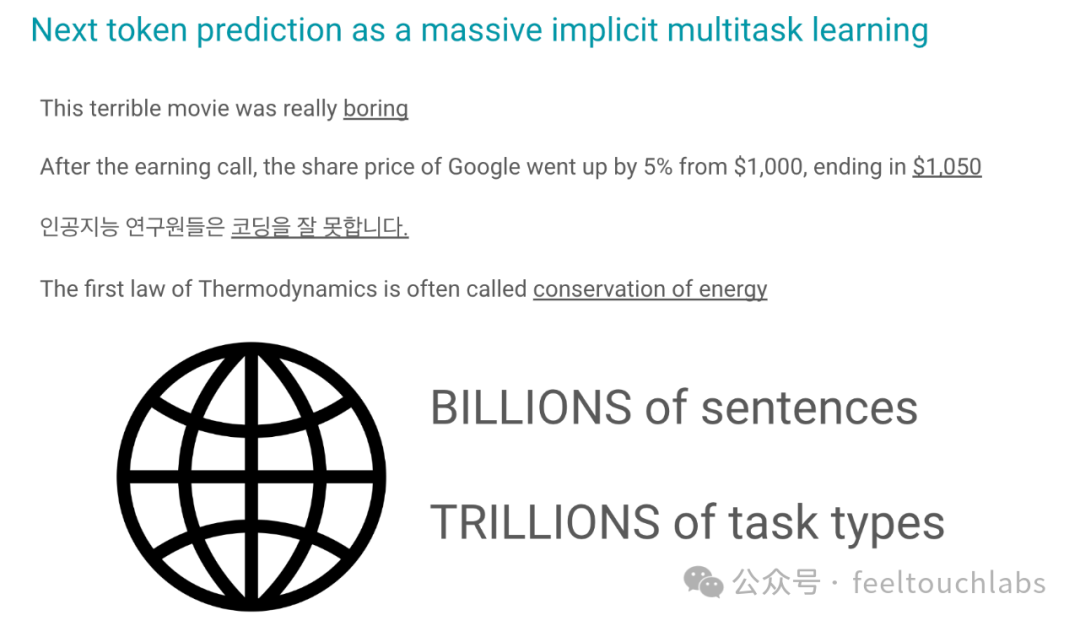

