|

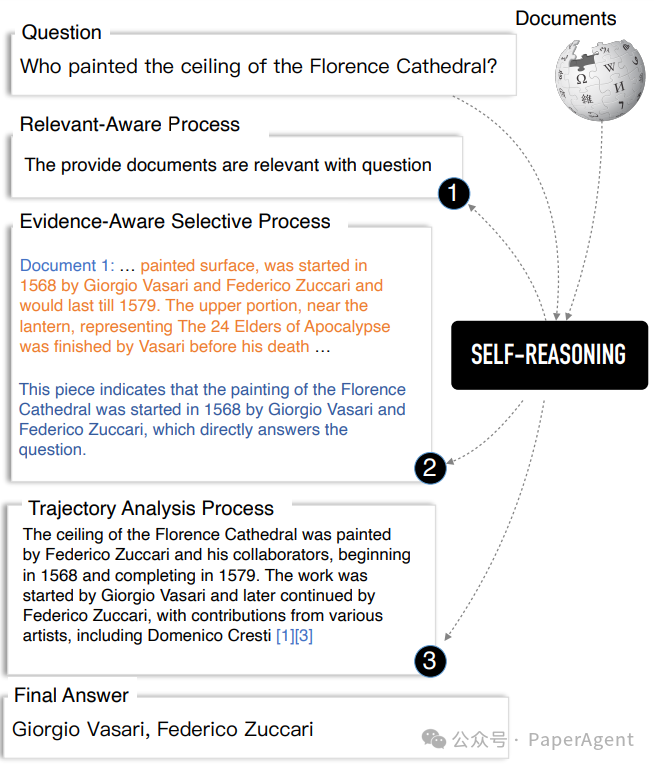

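Retrieval-Augmented Language Models (RALMs) mitigate the factual hallucinations inherent in LLMs by incorporating external knowledge at inference time. RALMs still face two challenges, however: irrelevant retrieved documents can lead to unhelpful answers, and the lack of proper citations in the generated output makes it hard to verify the model's trustworthiness.

Figure: An example of how Baidu's Self-Reasoning framework generates reasoning trajectories through a relevance-aware process, an evidence-aware selective process, and a trajectory analysis process.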

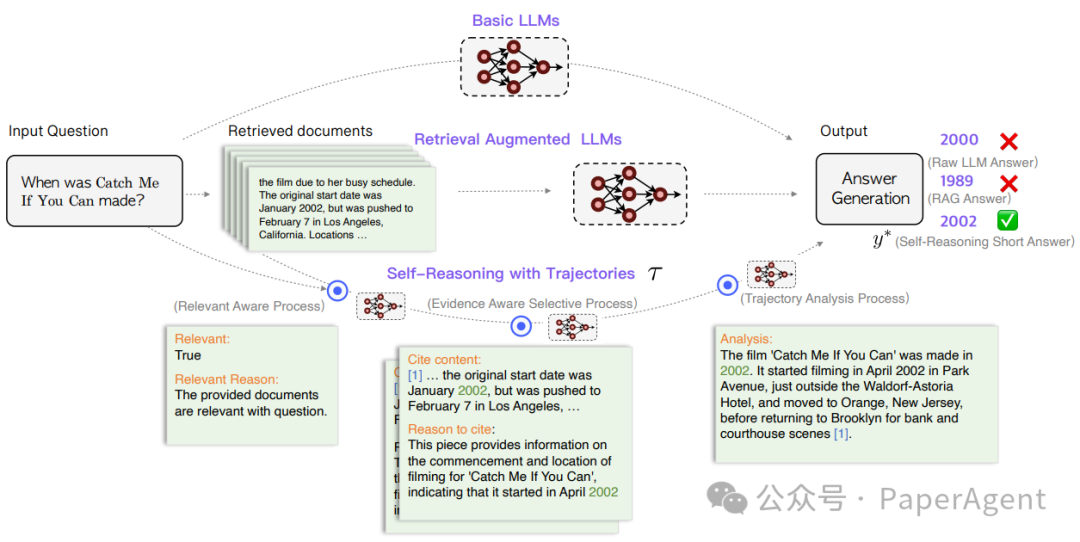

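To address these problems, Baidu Inc. proposed a novel self-reasoning framework (SELF-REASONING framework) that aims to improve the reliability and traceability of RALMs through reasoning trajectories generated by the LLM itself. Its novelty is that it improves LLM performance directly within an end-to-end framework, without relying on external reasoning models, which makes for a more efficient and scalable solution.

Figure: Illustration of the self-reasoning framework for improving RALMs. The top tier shows basic LLMs, which answer questions from their intrinsic knowledge. The middle tier shows standard retrieval-augmented language models, which use retrieved documents to help answer questions. The bottom tier shows Baidu's self-reasoning framework, which uses self-generated reasoning trajectories to output answers.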

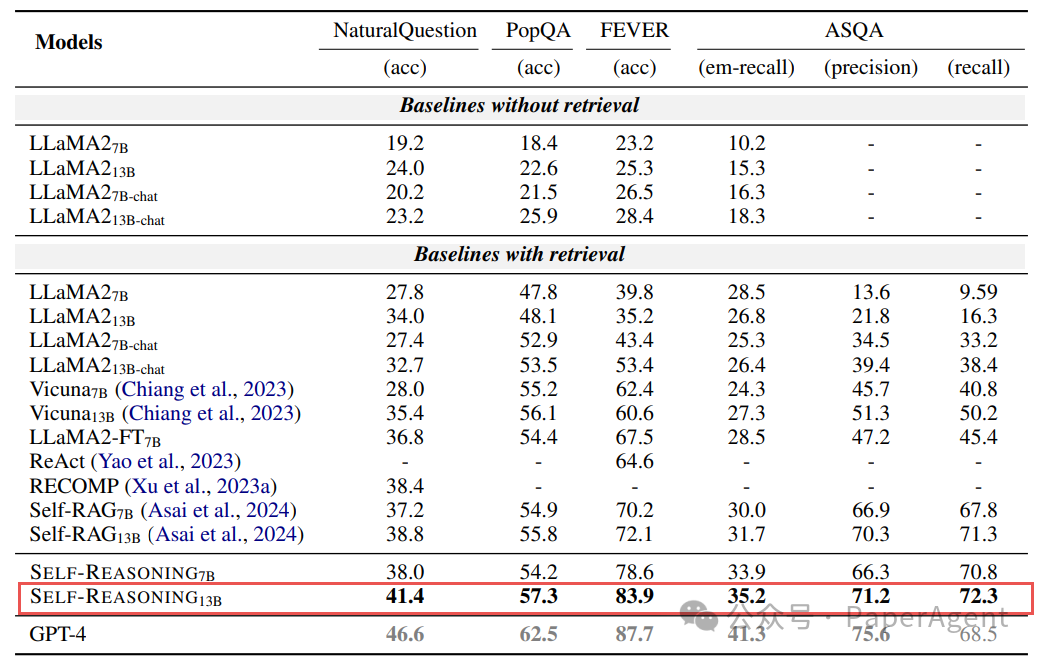

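- Relevance-Aware Process (RAP)
  - Use an off-the-shelf retriever (such as DPR or Contriever) to retrieve documents relevant to the question.
  - Instruct the model to judge the relevance of the retrieved documents to the question and to generate an explanation of why those documents are identified as relevant.
  - If all retrieved documents are irrelevant, the model should answer from the internal knowledge it acquired during pre-training.
- Evidence-Aware Selective Process (EAP)
- Trajectory Analysis Process (TAP)

In addition, the framework includes processes for data generation and quality control, and for model training.

Evaluation on four public datasets (two short-form QA datasets, one long-form QA dataset, and one fact verification dataset) shows that the method outperforms existing state-of-the-art models and achieves performance comparable to GPT-4 with only 2,000 training samples.

Table: Performance comparison with different baseline models on two short-form QA datasets, one long-form QA dataset, and one fact verification dataset. Numbers in bold are the best results excluding GPT-4. Results are averaged over five runs; standard deviations are omitted (all ≤ 2%).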

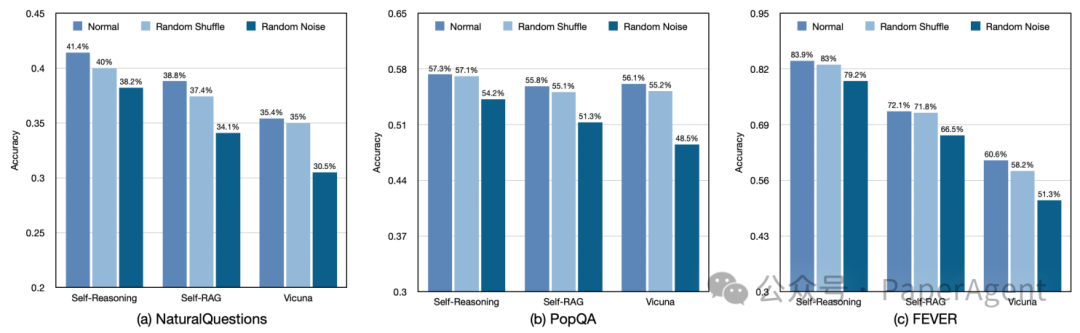

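By leveraging reasoning trajectories generated by the LLM itself, the SELF-REASONING framework improves the robustness of RALMs without relying on external models or tools. By requiring the LLM to explicitly generate document snippets and citations, it also improves the interpretability and traceability of RALMs.

Figure: Noise robustness results on three datasets: (a) NQ (left), (b) PopQA (middle), (c) FEVER (right). Self-RAG and Vicuna are models with 13B parameters.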

Appendix:

Prompt template used with GPT-4 to generate self-reasoning trajectories for short-form and long-form QA tasks:

    Instructions
    # Role
    You are an experienced expert, skilled in answering various questions.
    # Task
    Please answer the question according to the provided reference evidence as required.
    # Reference Evidence
    [1] Retrieved Document {{DOCUMENT1}}
    [2] Retrieved Document {{DOCUMENT2}}
    [3] Retrieved Document {{DOCUMENT3}}
    [4] Retrieved Document {{DOCUMENT4}}
    [5] Retrieved Document {{DOCUMENT5}}
    # Requirements
    1. First, please judge whether the provided documents are relevant with the question, and put it in the relevant field. If the provided content is irrelevant to the question, explain the reason in the relevant reason field, then you can give the answer with your internal knowledge.
    2. If possible, answer the question in points and provide explanations.
    3. If the content in the answer comes from different pieces of evidence, you need to cite the sequence number of the evidence at the end of the sentence. The citation format is shown below: [1], [1,3].
    4. Place each cited piece of evidence in the cite_list field, cite content field to store each paragraph of cited content (omitted words can be replaced by ...), cite reason is used to store your thoughts and analysis of this content, how this paragraph can answer the question.
    5. Put the long answer content in the analysis field, and put the short answer (no more than 10 words) in the answer field.
    # Question
    {{QUESTION}}

Prompt template used with GPT-4 to generate self-reasoning trajectories for the fact verification task:

    Instructions
    # Role
    You are an experienced expert, skilled in answering various questions.
    # Task
    Please answer the question according to the provided reference evidence as required.
    # Reference Evidence
    [1] Retrieved Document {{DOCUMENT1}}
    [2] Retrieved Document {{DOCUMENT2}}
    [3] Retrieved Document {{DOCUMENT3}}
    [4] Retrieved Document {{DOCUMENT4}}
    [5] Retrieved Document {{DOCUMENT5}}
    # Requirements
    1. First, please judge whether the provided documents are relevant with the claim, and put it in the relevant field. If the provided content is irrelevant to the question, explain the reason in the relevant reason field, then you can give the answer with your internal knowledge.
    2. If possible, answer the question in points and provide explanations.
    3. If the content in the answer comes from different pieces of evidence, you need to cite the sequence number of the evidence at the end of the sentence. The citation format is shown below: [1], [1,3].
    4. Place each cited piece of evidence in the list, use cite content field to store each paragraph of cited content (omitted words can be replaced by ...), cite reason is used to store your thoughts and analysis of this content, how this paragraph can answer the question.
    5. Put the long answer content in the analysis field, and put the short answer (SUPPORT/REFUTE/NOT ENOUGH INFO) in the answer field.
    # Question
    You should check whether the given claim is supported or refuted by the above evidence. If supported, output SUPPORT, if controversial, output REFUTE; if the evidence provided is not enough to make a decision or the provided evidence is irrelevant, output NOT ENOUGH INFO.
    {{CLAIM}}

Case study. The following example shows the self-reasoning trajectories that the SELF-REASONING framework generates during inference and how they lead logically to the correct answer.

Question: When was catch me if you can made?

Retrieved document #1: The film due to her busy schedule. The original start date was January 2002, but was pushed to February 7 in Los Angeles, California. Locations included Burbank, Downey, New York City, Quebec City and Montreal. …

Retrieved document #2: eventually turned to him for help in catching other check forgers. Development for the film started in 1980, but did not progress until 1997, when Spielberg's DreamWorks bought the film rights to Abagnale's book. David Fincher, Gore Verbinski, Lasse Hallström, Miloš Forman, and Cameron Crowe had all been possible candidates for director before Spielberg decided to direct. …

Retrieved document #3: It was funded independently and had a budget of $800,000. The film was Sommers's directorial debut and was written by him. When production finished, the studio that had promised to distribute the movie had gone out of business, and it was eventually released by MCA Inc. in July 1989. The movie…

(Other retrieved documents are omitted.)

Raw LLM Answer: 2000 ❌

Standard RAG Answer: 1989 ❌

Self-Reasoning Answer:

(Relevant Aware Process)

Relevant: True

Relevant Reason: The provided documents are relevant with question.

(Evidence Aware Selective Process)

Cite content: [1] … the original start date was January 2002, but was pushed to February 7 in Los Angeles, …

Reason to cite: This piece provides information on the commencement and location of filming for 'Catch Me If You Can', indicating that it started in April 2002

Cite content: [3] … When production finished, the studio that had promised to distribute the movie had gone out of business, and it was eventually released by MCA Inc. in July 1989., …

Reason to cite: This piece provides information on the release date of 'Catch Me If You Can', indicating that it was eventually released by MCA Inc. in July.

(Trajectory Analysis Process)

Analysis (long answer): The film 'Catch Me If You Can' was made in 2002. It started filming in April 2002 in Park Avenue, just outside the Waldorf-Astoria Hotel, and moved to Orange, New Jersey, before returning to Brooklyn for bank and courthouse scenes [1].

Final Answer (short answer): 2002 ✅
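The prompt templates and the case study suggest that a trajectory carries a relevance judgment, a list of cited snippets, a long analysis with inline citations such as [1] or [1,3], and a short answer. The sketch below is one hedged way to hold such a record in Python and check that every citation in the analysis is backed by an entry in the cite list. The class names, field names, and helper functions are illustrative assumptions and are not taken from the paper's code.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Citation:
    # All names here are hypothetical; they mirror the fields named in the prompt.
    index: int         # sequence number of the retrieved document, e.g. 1 for [1]
    cite_content: str  # snippet quoted from that document
    cite_reason: str   # why the snippet helps answer the question


@dataclass
class Trajectory:
    relevant: bool
    relevant_reason: str
    cite_list: List[Citation] = field(default_factory=list)
    analysis: str = ""  # long answer with inline citations
    answer: str = ""    # short answer


def cited_indices(text: str) -> Set[int]:
    """Collect document numbers from inline citations such as [1] or [1,3]."""
    found: Set[int] = set()
    for group in re.findall(r"\[(\d+(?:\s*,\s*\d+)*)\]", text):
        found.update(int(n) for n in group.split(","))
    return found


def untraceable_citations(traj: Trajectory) -> Set[int]:
    """Return citation numbers used in the analysis but missing from cite_list."""
    listed = {c.index for c in traj.cite_list}
    return cited_indices(traj.analysis) - listed


# Usage with abbreviated values from the case study above.
traj = Trajectory(
    relevant=True,
    relevant_reason="The provided documents are relevant with question.",
    cite_list=[
        Citation(1, "… the original start date was January 2002 …",
                 "Information on the commencement and location of filming."),
        Citation(3, "… it was eventually released by MCA Inc. in July 1989 …",
                 "Information on the release date."),
    ],
    analysis="The film 'Catch Me If You Can' was made in 2002. … [1].",
    answer="2002",
)
print(untraceable_citations(traj))  # prints set(): citation [1] is in cite_list
```

A check like this only confirms that citations point at listed evidence; it does not judge whether the cited snippet actually supports the answer.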

Improving Retrieval Augmented Language Model with Self-Reasoning. Baidu Inc., China. https://arxiv.org/pdf/2407.19813

|